10-31-Daily AI News Daily

AI News Daily 2025/10/31

AI News | Daily Briefing | Web Data Aggregation | Cutting-Edge Science Exploration | Industry Voice | Open Source Innovation | AI & Humanity’s Future

Today’s Summary

NVIDIA launches NVQLink to couple quantum processors with GPU computing; Google introduces StreetReaderAI to help visually impaired users explore Street View.

Vercel boosts sales efficiency with AI agents; MiniMax releases Speech 2.6, a low-latency speech synthesis model.

Sora 2 updates enhance creative interaction. A new technique from the lab led by former OpenAI CTO Mira Murati drastically cuts AI training costs.

Google's massive AI investment pays off with a surge in Gemini users. AI-related layoffs show how compute investment is reshaping employment.

Medical AI diagnostics, agent memory management, and other technologies continue to advance, while AI applications still face integration challenges.

Product & Feature Updates

- NVIDIA just dropped a bombshell at GTC: NVIDIA NVQLink! 🤯 This open system architecture is designed to tightly couple GPU computing with quantum processors, with the goal of building accelerated quantum supercomputers. This isn’t just an announcement; it’s a peek into the future of #quantum-GPU computing. Quantum computing is no longer an isolated island. It is being integrated with classical high-performance computing, bringing immense power to the table 🤝. Watch NVIDIA’s blueprint for the quantum supercomputing future and witness the next big leap in computing power! 🚀

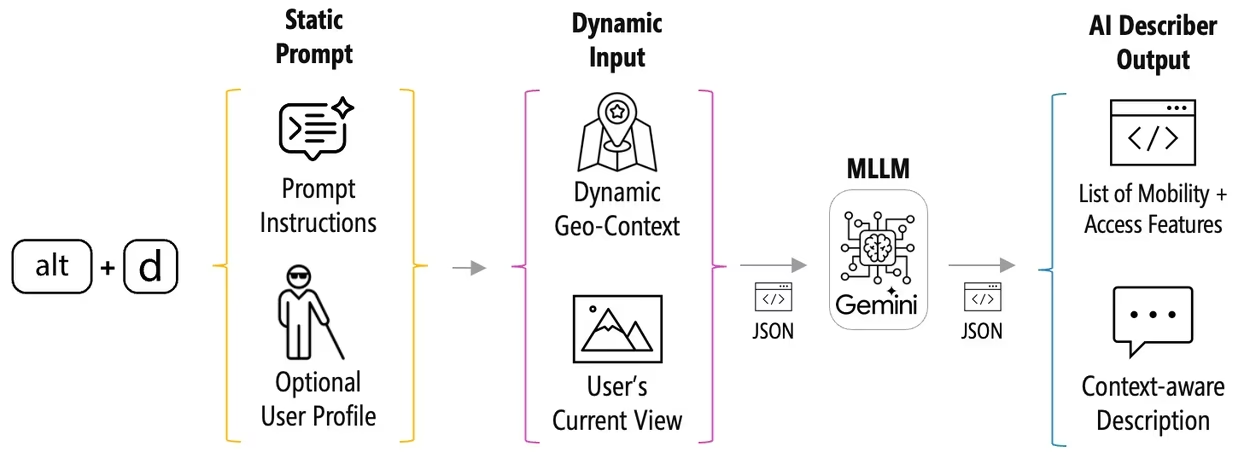

- StreetReaderAI just dropped from Google Research! This accessible Street View prototype is powered by Google's multimodal Gemini model. It lets blind and low-vision users “understand” and explore Google Street View through voice interaction 🗺️. It works like a talking virtual guide: it generates real-time spoken descriptions, holds smart conversations about the scene, and supports voice or keyboard navigation, making digital exploration barrier-free! (o´ω’o)ノ This research isn’t just a huge leap for accessibility tech; it’s a deep dive into how AI can bridge sensory gaps and build inclusive digital experiences! ❤️

- MiniMax has unleashed its latest voice tech, Speech 2.6! 🗣️ This bad boy boasts latency under 250 ms and correctly reads tricky text such as URLs and dates. It also delivers human-like voice effects and supports smooth mixed-language reading across more than 40 languages. Beyond voice cloning, it expresses rich emotions, making AI voices sound less like cold machines and more like warm conversations 🔥. Some users grumbled that the official demo video didn’t really show off its emotional range, a slight “fumble,” but that can’t hide the tech’s huge potential! (´・ω・`)

- Sora’s app just got a major revamp! ✨ A new character creation feature lets users craft virtual characters and have them “star” in videos, making creation super personalized and fun. The draft page now supports stitching multiple videos together for publishing. The search page adds a leaderboard that helps quality content and creators stand out, fostering a vibrant community 👨‍👩‍👧‍👦. These updates are likely to further ignite users’ creative passion and could send Sora 2’s daily active users skyrocketing again! 🚀

Cutting-Edge Research

- A breakthrough technique called “On-Policy Distillation” has just been unveiled by Thinking Machines Lab, the lab led by former OpenAI CTO Mira Murati! 🤯 This game-changing technique lets small models with just 8B parameters rival the performance of much larger 32B models, while slashing training costs by a whopping 90%. How? Through a “dense feedback per token” mechanism. The student model generates its own outputs, and the teacher model scores and guides every token in real time, delivering a 50-100x efficiency leap! Talk about an AI training revolution 🔥. This research doesn’t just tackle the “catastrophic forgetting” puzzle; its lightweight approach opens the door for SMEs and individual developers to train specialized AI at low cost. That pushes AI from a “giant’s game” toward a truly “universal tool”! 🚀

- How do you teach AI to “think when it needs to” instead of overthinking every little thing? 🤔 A fresh paper introduces TON, a strategy that combines “thought dropout” with reinforcement learning. It trains vision-language models (VLMs) to decide on their own when to generate a detailed reasoning process 🧠. Experiments show the method can slash generation length by up to 90% with no loss in performance, and sometimes even a gain! This brings AI’s thinking patterns closer to the human blend of intuition and deep deliberation. This research paves a new path toward more efficient, human-like reasoning models, moving us one step closer to true intelligence! 💡

- UnifiedReward-Think is here! A new paper introduces this bad boy as the first unified multimodal chain-of-thought (CoT) reward model. ✅ It evaluates both visual understanding and visual generation tasks through multi-dimensional, step-by-step long-chain reasoning, making reward signals more reliable and robust. Training follows an exploration-driven reinforcement learning recipe in two phases:
  - a cold start on reasoning traces distilled from GPT-4o;
  - training on large-scale data that explores diverse reasoning paths and optimizes toward correct solutions 💡.
  
  This research makes a strong case that integrating explicit long-chain thinking into reward models is key to making them more reliable, opening fresh avenues for model alignment! (✧∀✧)

- AI medical diagnosis just got a huge boost! 🩺 A new paper combines image analysis, thermal imaging, and audio signal processing for early detection of major conditions such as skin cancer, vascular thrombosis, and cardiopulmonary anomalies. Talk about an AI medical diagnosis “trident”! The framework pairs a fine-tuned MobileNetV2 with Support Vector Machines and Random Forests, and reports competitive accuracy on their respective tasks. The whole system is also lightweight enough to deploy on low-cost devices 📱. This research offers a promising blueprint for scalable, real-time, easily accessible AI pre-diagnosis, making high-quality early screening no longer a distant dream! ❤️
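The “dense feedback per token” mechanism from the distillation item above can be illustrated with a minimal sketch. It assumes a common on-policy formulation: the student samples a response, the teacher computes log-probabilities for those same tokens, and the per-token difference serves as the training signal. That difference is a single-sample estimate of the reverse KL divergence. The function names and toy numbers are hypothetical, and this is not the lab's actual code.

```python
import math
from typing import List

# Hypothetical helpers illustrating per-token ("dense") distillation signals.
# Inputs are log-probabilities of the tokens the *student* sampled:
#   student_logprobs[t] = log p_student(x_t | x_<t)
#   teacher_logprobs[t] = log p_teacher(x_t | x_<t)  (teacher scores the same tokens)


def per_token_advantages(student_logprobs: List[float], teacher_logprobs: List[float]) -> List[float]:
    """Per-token signal: log p_teacher - log p_student (negative single-sample reverse-KL estimate).

    Positive where the teacher likes the sampled token more than the student does,
    so a policy-gradient update would raise the student's probability of that token.
    """
    if len(student_logprobs) != len(teacher_logprobs):
        raise ValueError("log-prob sequences must align token by token")
    return [t - s for s, t in zip(student_logprobs, teacher_logprobs)]


def mean_reverse_kl(student_logprobs: List[float], teacher_logprobs: List[float]) -> float:
    """Average single-sample estimate of KL(student || teacher) over the sampled tokens."""
    advantages = per_token_advantages(student_logprobs, teacher_logprobs)
    if not advantages:
        return 0.0
    return -math.fsum(advantages) / len(advantages)


def sparse_outcome_advantages(num_tokens: int, reward: float) -> List[float]:
    """Contrast: outcome-based RL gives every token the same scalar reward for the whole answer."""
    return [reward] * num_tokens


if __name__ == "__main__":
    student = [-0.1, -2.0, -0.5]  # toy numbers: the student was unsure about the second token
    teacher = [-0.2, -0.3, -0.6]  # the teacher is confident about the second token
    print(per_token_advantages(student, teacher))  # the second token gets a large positive signal
    print(sparse_outcome_advantages(len(student), 1.0))
    print(mean_reverse_kl(student, teacher))
```

The contrast with `sparse_outcome_advantages` shows where the efficiency claim comes from. An outcome reward tells every token the same thing. The per-token signal singles out exactly which tokens the teacher disagrees with.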
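The TON item above describes a two-stage recipe. As we read the paper, its first stage is supervised fine-tuning in which some training targets have their reasoning trace randomly replaced by an empty thought. This teaches the model that skipping reasoning is a valid output before reinforcement learning refines when to do so. The sketch below covers only that data-preparation step. The `<think>` tag format, the `Sample` type, and the drop probability are assumptions, not the paper's code.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical target format; the real prompt/tag template may differ.
THINK_OPEN, THINK_CLOSE = "<think>", "</think>"


@dataclass
class Sample:
    prompt: str
    thought: str  # full reasoning trace
    answer: str


def format_target(thought: str, answer: str) -> str:
    """Render the supervised target: reasoning block followed by the final answer."""
    return f"{THINK_OPEN}\n{thought}\n{THINK_CLOSE}\n{answer}"


def apply_thought_dropout(samples: List[Sample], drop_prob: float, seed: Optional[int] = None) -> List[str]:
    """With probability drop_prob, replace a sample's reasoning with an empty thought.

    The resulting mix shows the model, during SFT, that an empty reasoning block is an
    allowed output; a later RL stage can then learn from outcome rewards when skipping is safe.
    """
    if not 0.0 <= drop_prob <= 1.0:
        raise ValueError("drop_prob must be in [0, 1]")
    rng = random.Random(seed)  # seeded for reproducible dataset construction
    targets = []
    for s in samples:
        thought = "" if rng.random() < drop_prob else s.thought
        targets.append(format_target(thought, s.answer))
    return targets
```

For example, `apply_thought_dropout(data, drop_prob=0.5, seed=0)` would produce a dataset in which roughly half the targets skip reasoning. The RL stage is not shown here.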

Industry Outlook & Social Impact

- Vercel, the cloud platform company, just staged a real-life “human-machine collaboration” show! 🤖 It trained AI agents to mimic the workflows of its top salespeople and slimmed a 10-person sales team down to one human plus an AI agent. The agent automatically handles tedious tasks such as reviewing inbound emails, screening leads, and gathering background information. That frees human employees to focus on more creative outreach. Talk about a massive leap in sales efficiency! 🚀 Vercel’s experience shows that AI is not just a tool for cutting costs and boosting efficiency. It is also a catalyst for reshaping organizational structures and ways of working, which suggests human-AI collaboration will grow much tighter in the future 🤔.

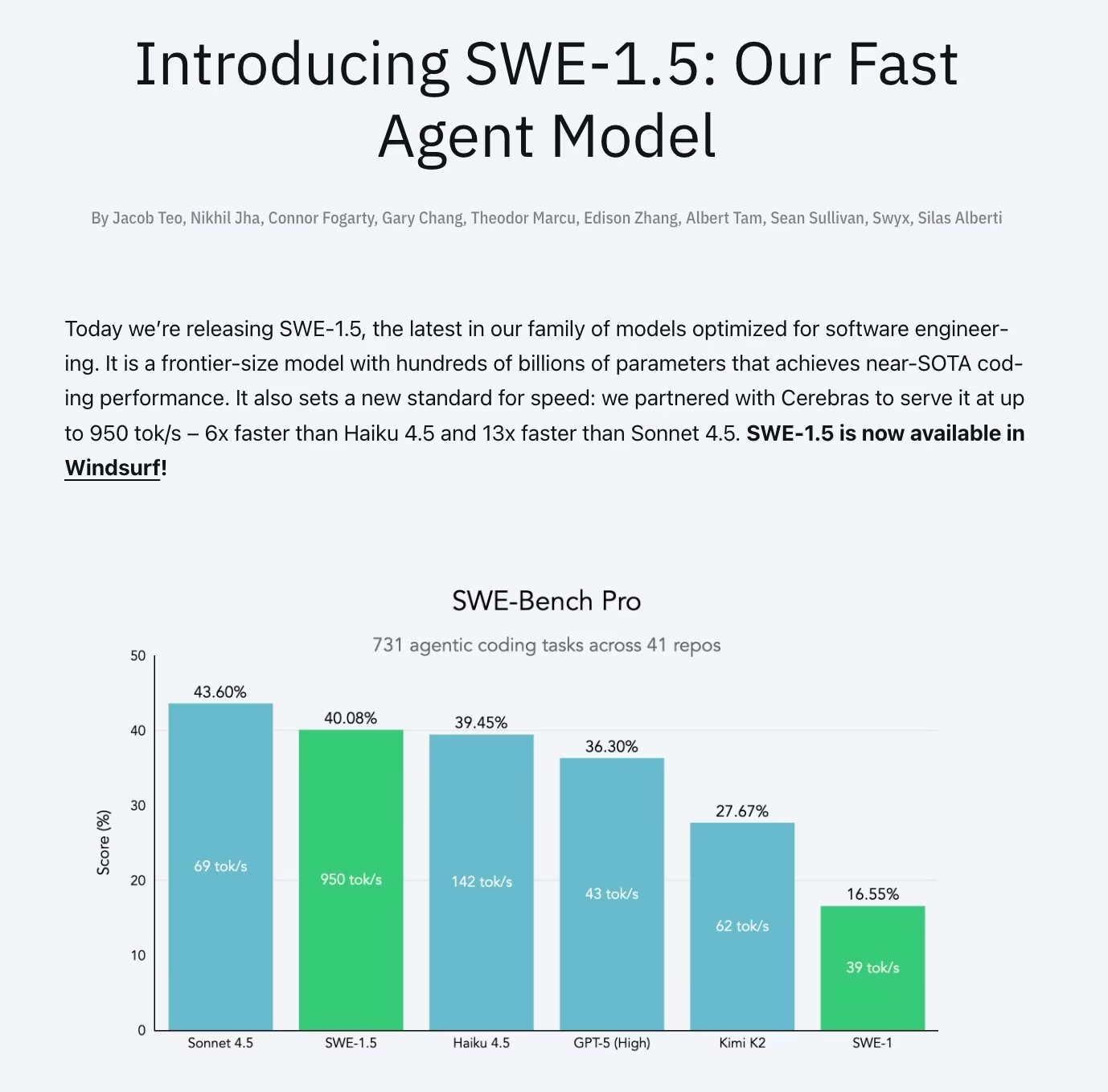

- Cognition AI just dropped SWE-1.5, a model with hundreds of billions of parameters optimized specifically for software engineering tasks! 💻 It’s designed to bridge the gap between “thinking speed” and “thinking depth” in AI coding tools. Cognition optimized the model, the inference engine, and the agent framework together as one system. As a result, SWE-1.5 achieves near-top performance on the tough SWE-Bench benchmark while running several times faster: 6x faster than Haiku 4.5 and a scorching 13x faster than Sonnet 4.5 🔥! This signals that AI coding tools are moving from merely “usable” to truly “good-to-use,” production-grade applications, bringing an unprecedented efficiency boost to developers! 🚀

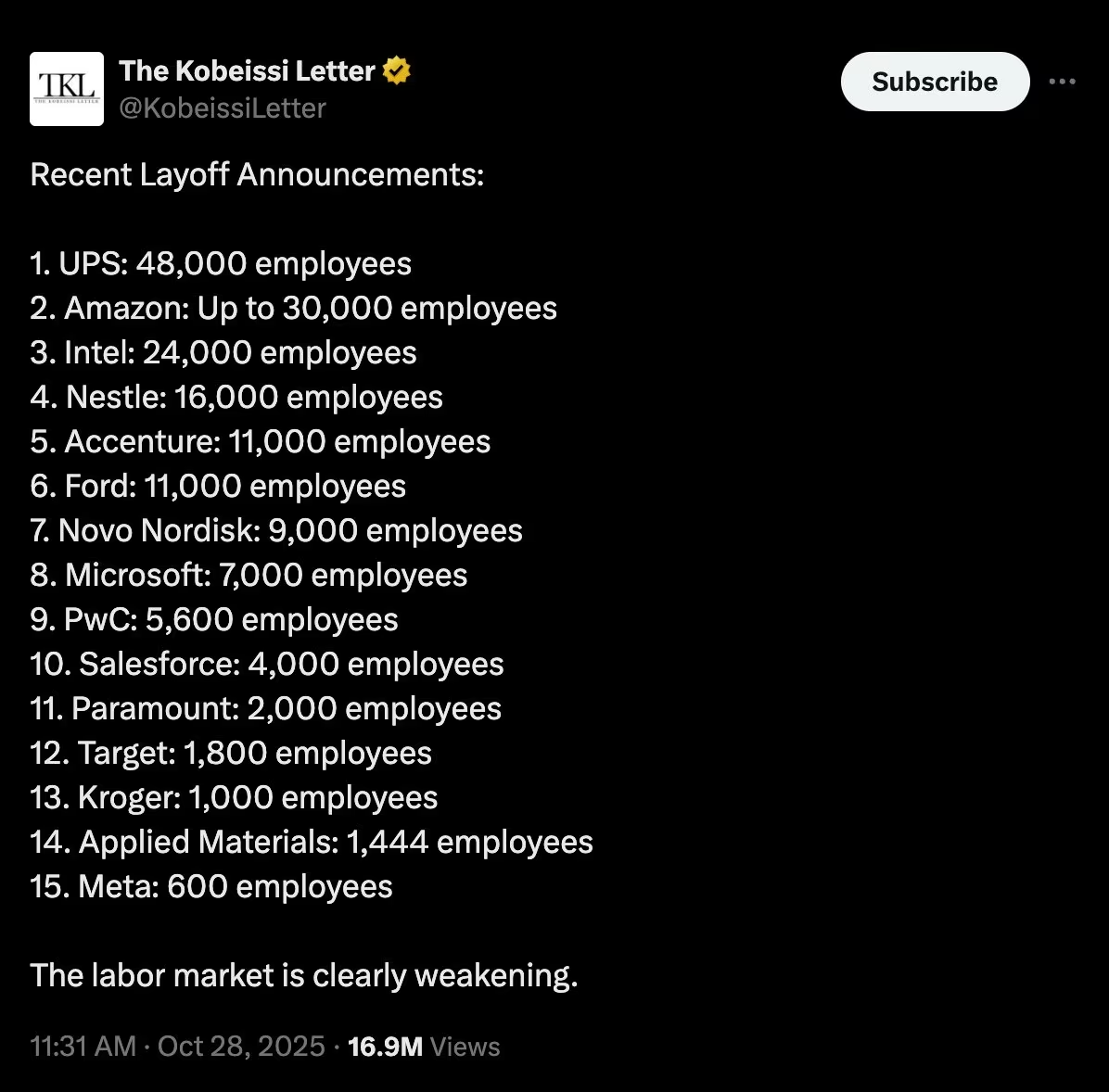

- The recent US layoff wave hides two very different AI stories! 🧐 Tech giants are cutting staff to free up budget for GPUs, while traditional industries are trimming headcount because AI tools have genuinely boosted productivity. The former are “buying shovels,” and the latter are buying the “gold dug with shovels.” Semiconductor companies sit comfortably in the middle, collecting rent from the entire value chain: a bizarre industrial cycle 🔄. This phenomenon reveals that wealth is concentrating in computing power rather than labor at an unprecedented speed, and the position of most workers is being redefined. This might not be an economic recession but a profound rebalancing of social structure 🤔.

- Google’s Q3 earnings report just showed off the sweet returns from its heavy bet on AI! 📈 Quarterly revenue topped $100 billion for the first time, and Gemini monthly active users hit 650 million. The cloud order backlog surged 46%, so almost every business line is cashing in on AI. Google now processes an astounding 1,300 trillion tokens every month, roughly 20 times the volume of the same period last year. That is strong evidence its AI commercialization is leading the pack industry-wide! 🚀 This string of impressive numbers injects a serious dose of confidence into AI’s commercial future! 💪

- A new study has just unveiled the “Remote Labor Index” (RLI)! 🤖 This benchmark tests AI agents on 240 real-world freelance projects, essentially a huge skills assessment for AI “workers.” The results show that Manus, currently the top-performing AI agent, successfully completed only 2.5% of the projects. But hey, newer models consistently outperform older ones, which indicates AI’s ability to automate remote work is steadily climbing 📈. Check out this fun AI capability testing website to see how far AI is from taking our jobs! (o´ω’o)ノ
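For a sense of scale on the RLI result, here is the arithmetic behind the headline number. Assuming the 2.5% figure is measured over all 240 projects, the top agent's score works out to about six fully completed projects:

$$
0.025 \times 240 = 6 \text{ projects}
$$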

Open Source TOP Projects

- Storybook (⭐88.3k) has become the industry-standard workshop for UI component development, documentation, and testing! 🎨 It lets frontend developers build and showcase UI components in isolation, massively boosting development efficiency and collaboration. This powerful open-source tool is an indispensable part of modern frontend development, helping teams build more robust and consistent user interfaces! (✧∀✧)

- AI agents’ “memory” problems are finally being addressed! 🧠 The mem0 (⭐42.2k) project builds a universal memory layer for AI agents, and the team has released OpenMemory MCP for local, secure memory management. This gives AI agents human-like long-term memory, so they can maintain contextual coherence and decision consistency in complex tasks. It’s a crucial step toward truly autonomous agents! 🚀

- Tencent has open-sourced WeKnora (⭐6.8k)! 📚 This LLM-powered framework follows the RAG (retrieval-augmented generation) paradigm and focuses on deep document understanding, semantic retrieval, and context-aware Q&A. It provides powerful tools for processing and understanding complex documents. Developers can use it to easily build smart Q&A systems that “read” massive amounts of data. It’s got massive potential in knowledge management and information retrieval! 💡

- In the medical imaging AI space, MONAI (⭐7.1k) is an indispensable open-source toolkit! 🩺 It provides a wealth of tools and standardized workflows for deep learning research and applications in medical imaging. Built by experts from academia and industry, the project aims to accelerate AI applications and innovation in medical diagnosis, helping AI better serve human health! ❤️
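To make the “universal memory layer” idea from the mem0 item concrete, here is a toy sketch of the basic loop such a layer performs. It stores facts per user, retrieves the most relevant ones for a new query, and formats them for an agent's prompt. Everything here (`ToyMemoryLayer` and its keyword-overlap scoring) is hypothetical and is not the API of mem0 or OpenMemory MCP. Production systems typically use an LLM to extract facts and vector embeddings for retrieval.

```python
import re
from typing import Dict, List, Set, Tuple


def _tokens(text: str) -> Set[str]:
    """Lowercased alphanumeric tokens; a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class ToyMemoryLayer:
    """Hypothetical per-user long-term memory store (not mem0's API)."""

    def __init__(self) -> None:
        self._memories: Dict[str, List[str]] = {}

    def add(self, user_id: str, fact: str) -> None:
        """Persist a fact for a user, skipping exact duplicates."""
        facts = self._memories.setdefault(user_id, [])
        if fact not in facts:
            facts.append(fact)

    def search(self, user_id: str, query: str, k: int = 3) -> List[str]:
        """Return up to k stored facts ranked by Jaccard overlap with the query."""
        query_tokens = _tokens(query)
        scored: List[Tuple[float, str]] = []
        for fact in self._memories.get(user_id, []):
            fact_tokens = _tokens(fact)
            union = query_tokens | fact_tokens
            score = len(query_tokens & fact_tokens) / len(union) if union else 0.0
            if score > 0:
                scored.append((score, fact))
        scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep insertion order
        return [fact for _, fact in scored[:k]]

    def build_context(self, user_id: str, query: str, k: int = 3) -> str:
        """Format retrieved memories as a bullet list to prepend to an agent's prompt."""
        return "\n".join(f"- {fact}" for fact in self.search(user_id, query, k))
```

Keeping memories keyed by user ID is what lets an agent stay consistent across sessions. Swapping `_tokens` and the Jaccard score for embeddings would be the natural next step.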
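Frameworks like WeKnora build on a basic RAG loop: chunk the documents, retrieve the chunks most similar to a question, and place them in the prompt as context. The sketch below is a hypothetical illustration, not WeKnora's API. Bag-of-words cosine similarity stands in for the semantic, embedding-based retrieval the project describes, and the prompt wording is an assumption.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk(text: str, max_words: int = 50) -> List[str]:
    """Split a document into fixed-size word windows (real systems often chunk by structure)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def _bag_of_words(text: str) -> Counter:
    """Lowercased term counts for a piece of text."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(chunks: List[str], query: str, k: int = 2) -> List[Tuple[float, str]]:
    """Rank chunks by similarity to the query and keep the top k."""
    query_vec = _bag_of_words(query)
    scored = [(cosine(query_vec, _bag_of_words(c)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def build_prompt(query: str, chunks: List[str], k: int = 2) -> str:
    """Augment the question with retrieved context before sending it to an LLM."""
    context = "\n\n".join(c for _, c in retrieve(chunks, query, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"
```

Usage would look like `build_prompt("how long is the warranty", chunk(manual_text))`, with the returned string passed to whichever LLM the system uses.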

Social Media Shares

- AI IDEs like Cursor and Windsurf are starting to develop their own code models! 👨‍💻 This is a big deal: it signals that AI programming tools are hustling to reduce their reliance on upstream model providers and gain more autonomy. AI IDEs have abundant user scenarios and real-world data. With targeted RL training, they could go head-to-head with general-purpose large models in coding 🤔. This trend suggests competition in AI programming will become more intense and more verticalized, hinting at more “small but mighty” specialized code models in the future! (✧∀✧)

- Viggle’s multi-person tracking and object replacement features are powerful, no doubt. But when the replacement differs too much in shape from the original subject, you get a hilariously cringe-worthy “uncanny valley” effect! 😂 One user tried replacing Jackie Chan with a cat in “Rob-B-Hood,” and the video’s vibe flipped completely, filled with creepy hilarity 🤣. This amusing failure case vividly shows the current limitations of AI video tools in complex dynamic scenes. Looks like AI still has a long way to go before it masters the perfect “transformation”! (´・ω・`)

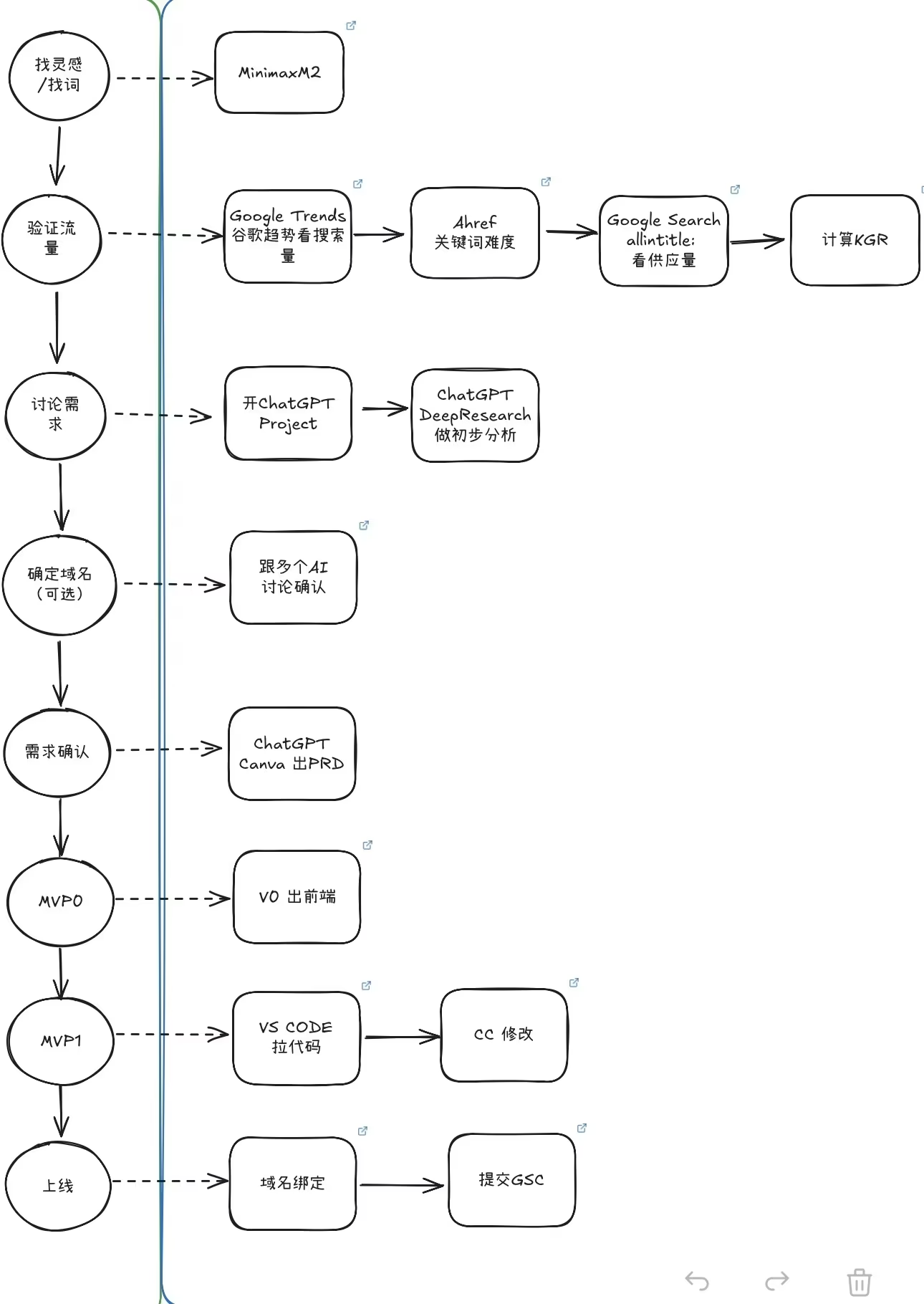

- A Jike user shared his “8 Steps for Product Launch,” a systematic checklist for taking a website or product live! It covers crucial stages from domain resolution and server configuration to monitoring alerts and backup strategies 👍. This methodology is incredibly valuable for any developer or team launching an online service, helping them avoid all sorts of post-launch “pitfalls.” Check out this super practical launch guide to make your product launch smooth and reliable! (o´ω’o)ノ

- AI is stepping up to bring much-needed structure to our messy human thoughts and processes! 🤔 Current systems are a hot mess precisely because humans are messy. AI’s role isn’t just to mimic intelligence; it’s to tidy up and optimize chaotic information and processes through algorithms and models. The result is systems that are more reliable, easier to understand, and fully auditable 💡. This perspective offers a new dimension for understanding AI’s value, essentially casting AI as a “structuring tool” for human thought! 🧐

AI News Daily Voice Version

| 🎙️ Xiaoyuzhou | 📹 Douyin |
|---|---|
| Laisheng Bistro | Self-Media Account |
